Serving GenAI models has two big problems: GPU scarcity and high costs.

- GPU shortage: AI deployments often run out of GPU capacity within a single region or data center. However, existing serving solutions (e.g., Kubernetes) are confined to a single region or even a single zone, so GenAI workloads often fail to autoscale when capacity runs short.

- High costs: GPU instances are significantly more expensive than CPU instances, and the cost is higher still when you are tied to a single cloud provider: for an A100-80GB instance, the price can differ by up to 30% across the big three clouds.

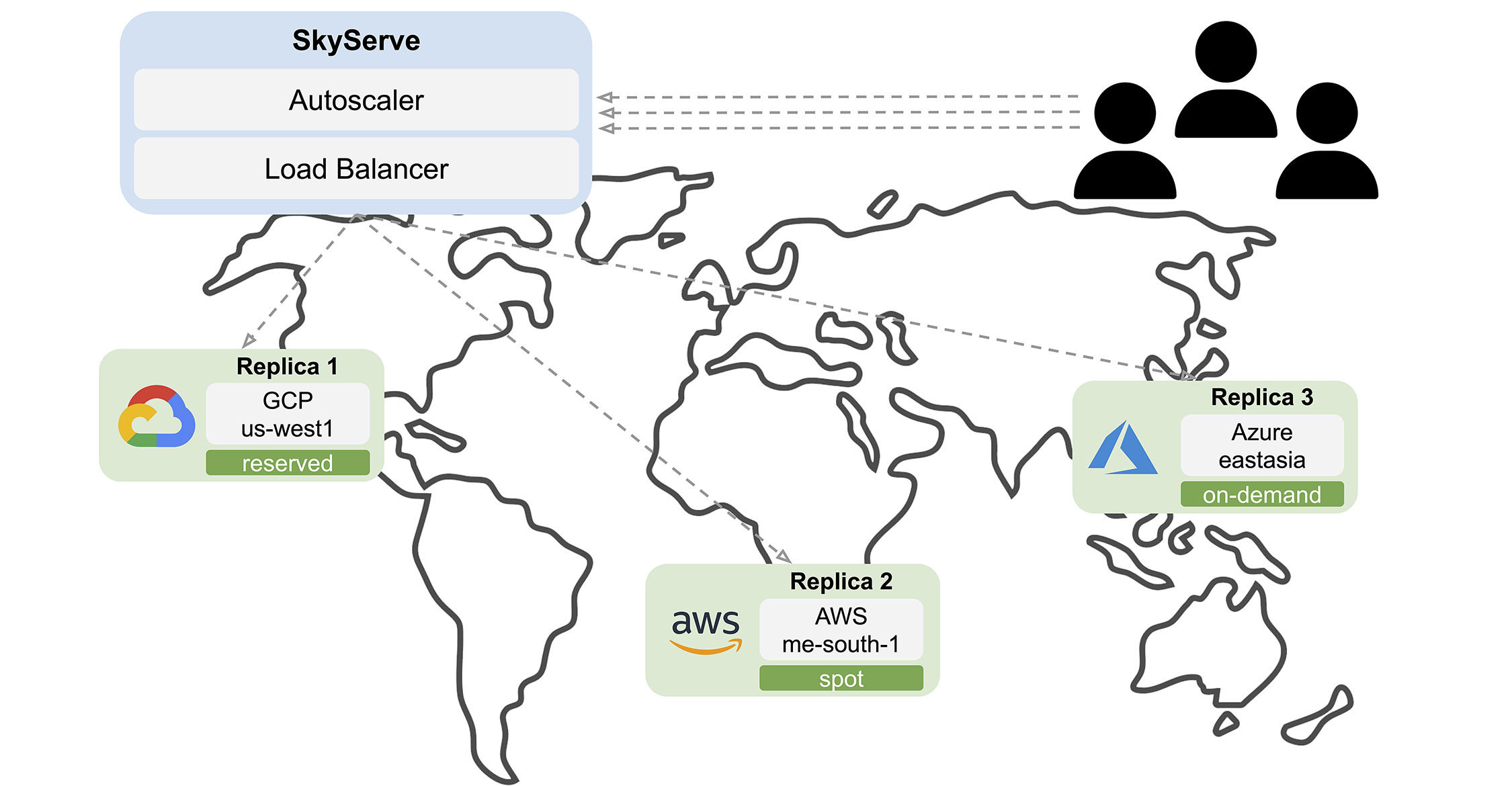

To mitigate these problems, we introduce SkyServe, a simple, cost-efficient, multi-region, multi-cloud library for serving GenAI models. It brings ~50% cost savings and improves GPU availability by drawing on capacity across regions and clouds.

SkyServe: A multi-region, multi-cloud serving library

SkyServe is an open-source library that takes an existing serving framework and deploys it across one or more regions or clouds. SkyServe focuses on scaling GenAI models, but it also supports any service with HTTP endpoints and provides intelligent support for reserved, on-demand, and spot instances. Its goal is to make model deployment easier and cheaper through a simple interface. SkyServe is in production use at LMSys Chatbot Arena, where it has processed over 800,000 requests and served ~10 open LLMs globally.

Our key observation is that GPU cost and availability are not uniformly distributed across clouds and regions, so intelligently picking the right resources can serve GenAI models reliably at reduced cost. Moreover, location-based optimization is feasible because the latency of GenAI workloads is dominated by computation time or queueing delay rather than by cross-region network latency (at most 300 ms of network latency vs. multiple seconds of compute). That said, SkyServe fully supports deploying a service in a single zone, region, or cloud.
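As a back-of-the-envelope check on why cross-region routing is affordable, the sketch below computes the share of end-to-end latency spent on the network hop. The 300 ms figure comes from the paragraph above. The 5-second compute time is an assumed, illustrative value, not a measurement.

```python
def network_share(network_s: float, compute_s: float) -> float:
    """Fraction of end-to-end latency contributed by the network hop."""
    return network_s / (network_s + compute_s)

# 300 ms worst-case cross-region latency (from the text);
# 5 s of generation time is an assumed, illustrative value.
share = network_share(0.3, 5.0)
print(f"network share of latency: {share:.1%}")  # network share of latency: 5.7%
```

Even in the worst case, the network adds only a few percent to what users wait for.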

Why SkyServe

- Bring any inference engine (vLLM, TGI, …) and serve it with out-of-the-box load balancing and autoscaling.

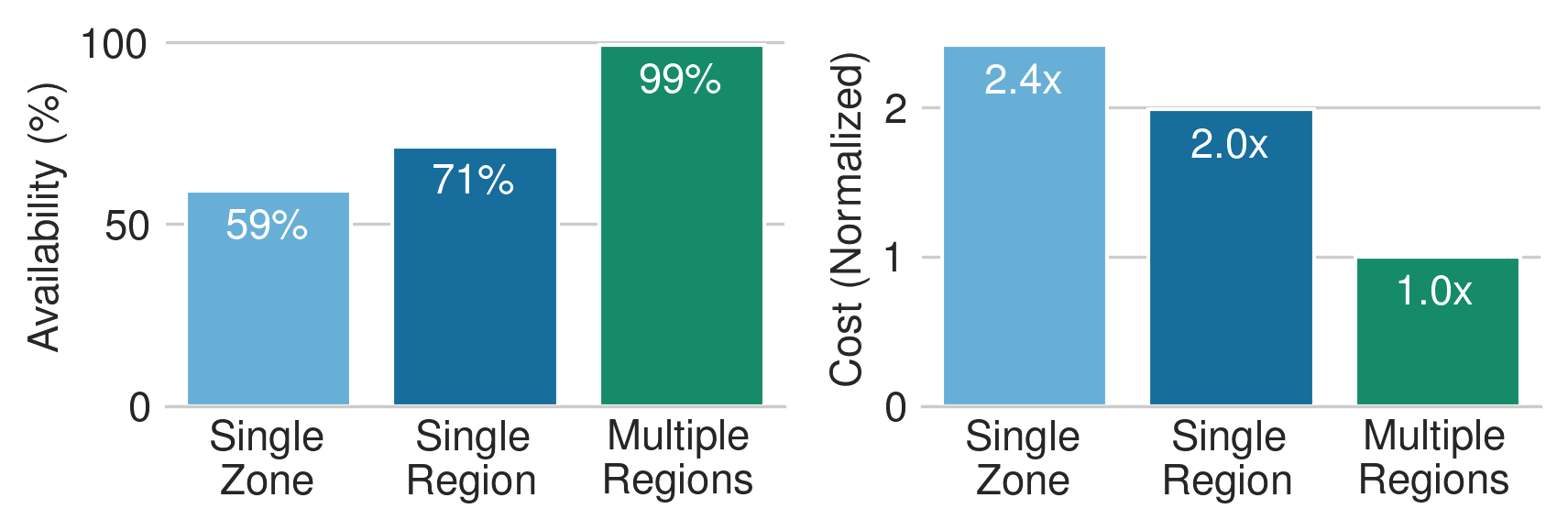

- Improve availability by leveraging different regions, clouds, and accelerators.[1]

- Reduce costs by ~50% with automatic resource selection and spot instances.[2]

- Simplify deployment with a unified service interface and automatic failure recovery.

How it works

Given an existing model inference engine (vLLM, TGI, …) that exposes HTTP endpoints, SkyServe deploys it across one or more regions or clouds. SkyServe will:

- Automatically figure out the locations (zone/region/cloud) with the cheapest resources and accelerators to use,

- Deploy and replicate the service on multiple “replicas”, and

- Expose a single endpoint that load-balances requests to a service’s replicas.

When any error or a spot preemption occurs, SkyServe will recover that replica by reoptimizing placement using the current price and availability.
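The placement-and-recovery loop described above can be sketched in a few lines. This is a toy model, not SkyServe's controller. `Location`, `cheapest_available`, and `recover` are hypothetical names. The prices are the per-replica estimates from the optimizer output in the Quick Tour.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Location:
    """One candidate placement for a replica (hypothetical model)."""
    cloud: str
    region: str
    accelerator: str
    hourly_cost: float

def cheapest_available(candidates, unavailable):
    """Pick the cheapest candidate that is not known to be out of capacity."""
    options = [c for c in candidates if c not in unavailable]
    if not options:
        raise RuntimeError("no capacity in any candidate location")
    return min(options, key=lambda c: c.hourly_cost)

def recover(replicas, failed_index, candidates, unavailable):
    """Replace one failed/preempted replica, re-optimizing its placement."""
    # Treat the location that just failed as unavailable for now.
    blocked = set(unavailable) | {replicas[failed_index]}
    updated = list(replicas)
    updated[failed_index] = cheapest_available(candidates, blocked)
    return updated

# Per-replica prices taken from the optimizer table in the Quick Tour.
candidates = [
    Location("Azure", "eastus", "A100-80GB:2", 7.35),
    Location("GCP", "us-east4-a", "L4:8", 7.98),
    Location("GCP", "us-central1-a", "A100-80GB:2", 10.06),
]
replicas = [cheapest_available(candidates, set())] * 2
replicas = recover(replicas, 0, candidates, set())  # replica 0 preempted
print([r.region for r in replicas])  # ['us-east4-a', 'eastus']
```

The real system also has to track capacity over time and retry previously failed locations. This sketch only shows the "re-pick the cheapest remaining option" step.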

Quick Tour

First, let’s install SkyPilot and check that cloud credentials are set up (see docs here):

pip install "skypilot-nightly[all]"

sky check

After installation, we can bring up a two-replica deployment of Mixtral 8x7B powered by the vLLM engine. To specify a service, define a Service YAML:

# mixtral.yaml
service:
  readiness_probe: /v1/models
  replicas: 2

# Fields below describe each replica.
resources:
  ports: 8080
  accelerators: {L4:8, A10G:8, A100:4, A100:8, A100-80GB:2, A100-80GB:4, A100-80GB:8}

setup: |
  conda create -n vllm python=3.10 -y
  conda activate vllm
  pip install vllm==0.3.0 transformers==4.37.2

run: |
  conda activate vllm
  export PATH=$PATH:/sbin
  python -m vllm.entrypoints.openai.api_server \
    --tensor-parallel-size $SKYPILOT_NUM_GPUS_PER_NODE \
    --host 0.0.0.0 --port 8080 \
    --model mistralai/Mixtral-8x7B-Instruct-v0.1

Fields required by a Service YAML are the service section and the resources.ports field. In the former, we configure the service:

- readiness_probe: path used to check the status of the service on a replica. SkyServe probes this path and checks whether the return code is 200 OK. The request can be a health-check API exposed by the service, such as /health, or an actual request. If it fails to get a response, SkyServe marks the replica as not ready and stops routing traffic to it.[3]
- replicas: target number of replicas in this deployment. This is a shortcut for a fixed-replica deployment; an autoscaling option is shown in a later section.

Also, the accelerators field under resources specifies a “set” of candidate accelerators. When launching a replica, SkyServe picks the cheapest accelerator that is available. Specifying a set of candidates therefore improves GPU availability: if one option is out of capacity, the replica can launch on another.

Finally, resources.ports specifies the port on which every replica exposes the service.
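To summarize these rules, here is a hypothetical checker for a Service YAML after it has been parsed into a Python dict. It encodes only what this post states: a service section, resources.ports, and either a fixed replicas count or the replica_policy used for autoscaling later on. It is not SkyServe's own validation.

```python
def validate_service_spec(spec: dict) -> list:
    """Return problems found in a parsed Service YAML (empty list if none).

    Hypothetical checker encoding only the rules described in this post.
    """
    problems = []
    service = spec.get("service")
    if not isinstance(service, dict):
        problems.append("missing 'service' section")
    elif "replicas" in service and "replica_policy" in service:
        problems.append("set either service.replicas or service.replica_policy")
    if "ports" not in (spec.get("resources") or {}):
        problems.append("missing resources.ports")
    return problems

# The service/resources part of mixtral.yaml after YAML parsing
# (accelerators omitted for brevity).
mixtral = {
    "service": {"readiness_probe": "/v1/models", "replicas": 2},
    "resources": {"ports": 8080},
}
print(validate_service_spec(mixtral))  # []
```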

Let’s spin up this service with a single command:

sky serve up mixtral.yaml -n mixtral

That’s all you need! This prints output that includes the following table:

Service from YAML spec: mixtral.yaml
Service Spec:
Readiness probe method: GET /v1/models
Readiness initial delay seconds: 1200
Replica autoscaling policy: Fixed 2 replicas
Each replica will use the following resources (estimated):
== Optimizer ==
Estimated cost: $7.3 / hour
Considered resources (1 node):
----------------------------------------------------------------------------------------------------------
CLOUD  INSTANCE                   vCPUs  Mem(GB)  ACCELERATORS  REGION/ZONE    COST ($)  CHOSEN
----------------------------------------------------------------------------------------------------------
Azure  Standard_NC48ads_A100_v4   48     440      A100-80GB:2   eastus         7.35      ✔
GCP    g2-standard-96             96     384      L4:8          us-east4-a     7.98
GCP    a2-ultragpu-2g             24     340      A100-80GB:2   us-central1-a  10.06
GCP    a2-highgpu-4g              48     340      A100:4        us-central1-a  14.69
Azure  Standard_NC96ads_A100_v4   96     880      A100-80GB:4   eastus         14.69
AWS    g5.48xlarge                192    768      A10G:8        us-east-1      16.29
GCP    a2-ultragpu-4g             48     680      A100-80GB:4   us-central1-a  20.11
Azure  Standard_ND96asr_v4        96     900      A100:8        eastus         27.20
GCP    a2-highgpu-8g              96     680      A100:8        us-central1-a  29.39
Azure  Standard_ND96amsr_A100_v4  96     1924     A100-80GB:8   eastus         32.77
AWS    p4d.24xlarge               96     1152     A100:8        us-east-1      32.77
GCP    a2-ultragpu-8g             96     1360     A100-80GB:8   us-central1-a  40.22
AWS    p4de.24xlarge              96     1152     A100-80GB:8   us-east-1      40.97
----------------------------------------------------------------------------------------------------------
Launching a new service 'mixtral'. Proceed? [Y/n]:

Here, the optimizer lists every candidate resource across AWS, GCP, and Azure for each accelerator listed in the YAML, and chooses the cheapest. After confirmation, SkyServe takes care of replica management, load balancing, and optional autoscaling through a SkyServe controller VM.

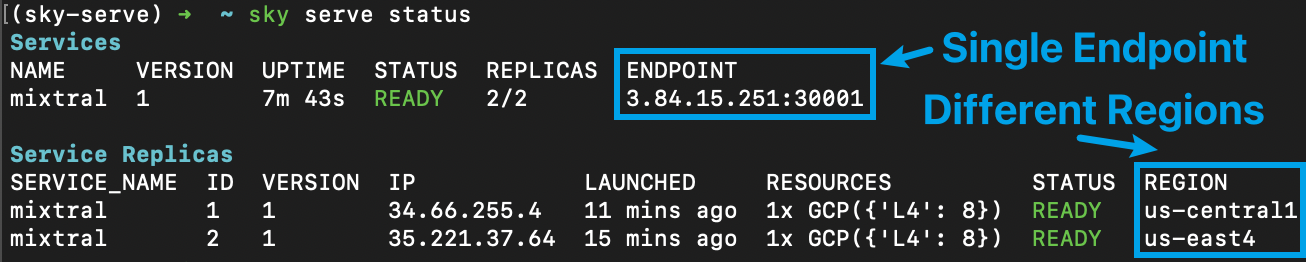

You can use sky serve status to check the service status. Once the status becomes READY, the service can start handling traffic.

Note that the two replicas were launched in different regions for the lowest cost and highest GPU availability. In general, replicas can also be hosted on different cloud providers if a single cloud does not have enough GPU capacity. (See all cloud providers SkyPilot supports here.)

SkyServe generates a single service endpoint for all replicas. The SkyServe controller load-balances this endpoint across the replicas, so a simple curl -L request to it is automatically routed to one of them (-L is needed because SkyServe uses HTTP redirection under the hood):

$ curl -L 3.84.15.251:30001/v1/chat/completions \
    -X POST \
    -d '{"model": "mistralai/Mixtral-8x7B-Instruct-v0.1", "messages": [{"role": "user", "content": "Who are you?"}]}' \
    -H 'Content-Type: application/json'

# Example output:
{"id":"cmpl-80b2bfd6f60c4024884c337a7e0d859a","object":"chat.completion","created":1005,"model":"mistralai/Mixtral-8x7B-Instruct-v0.1","choices":[{"index":0,"message":{"role":"assistant","content":" I am a helpful AI assistant designed to provide information, answer questions, and engage in conversation with users. I do not have personal experiences or emotions, but I am programmed to understand and process human language, and to provide helpful and accurate responses."},"finish_reason":"stop"}],"usage":{"prompt_tokens":13,"total_tokens":64,"completion_tokens":51}}
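Because the replicas expose vLLM's OpenAI-compatible API, a client can consume the response with any JSON parser. The minimal sketch below uses only Python's standard library to pull the reply and token usage out of the example output above. The reply text is trimmed, and nothing here is SkyServe-specific.

```python
import json

# The example response above, with the assistant's reply trimmed.
raw = '''{"id":"cmpl-80b2bfd6f60c4024884c337a7e0d859a","object":"chat.completion",
"created":1005,"model":"mistralai/Mixtral-8x7B-Instruct-v0.1",
"choices":[{"index":0,"message":{"role":"assistant",
"content":" I am a helpful AI assistant designed to provide information, ..."},
"finish_reason":"stop"}],
"usage":{"prompt_tokens":13,"total_tokens":64,"completion_tokens":51}}'''

resp = json.loads(raw)
reply = resp["choices"][0]["message"]["content"].strip()
usage = resp["usage"]
# OpenAI-compatible servers report total = prompt + completion tokens.
assert usage["total_tokens"] == usage["prompt_tokens"] + usage["completion_tokens"]
print(reply[:22], "|", usage["completion_tokens"], "completion tokens")
```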

Congratulations! You have deployed the Mixtral model with 2 replicas. Next, let’s look at some advanced configurations in SkyServe to further improve the service.

Advanced Configurations

Readiness probe

In the previous example, we used the /v1/models endpoint to double as a health-check endpoint. SkyServe periodically sends a GET request to it and interprets a 200 OK response as “replica is ready”.

In the previous configuration, readiness_probe: <path> is a shorthand for the following specification, which is an HTTP GET request to the path:

service:
  readiness_probe:
    path: <path>

SkyServe also allows sending a POST request as the readiness probe. This can be useful for several reasons, e.g., ensuring the GPU can actually handle requests. To use real compute traffic as the probe, we can specify a POST request:

service:
  readiness_probe:
    path: /v1/chat/completions
    post_data:
      model: mistralai/Mixtral-8x7B-Instruct-v0.1
      messages:
        - role: user
          content: Hello! What is your name?
      max_tokens: 1
  replicas: 2

With this, each readiness probe is a POST request for a chat completion sent to a service replica. Although each probe may take longer to process, it reflects the status of the system more accurately: the original /v1/models probe could succeed even while /v1/chat/completions is malfunctioning.
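To make the two probe forms concrete, the hypothetical helper below shows how a readiness_probe value could be expanded. A bare string becomes a GET on that path. A mapping with post_data becomes a POST with that data as the JSON body. It mirrors the behavior described in this section; it is not SkyServe's source code.

```python
from typing import Optional, Tuple, Union

def normalize_probe(probe: Union[str, dict]) -> Tuple[str, str, Optional[dict]]:
    """Expand a readiness_probe value into (method, path, json_body)."""
    if isinstance(probe, str):  # shorthand: `readiness_probe: <path>`
        probe = {"path": probe}
    body = probe.get("post_data")
    method = "POST" if body is not None else "GET"
    return method, probe["path"], body

print(normalize_probe("/v1/models"))  # ('GET', '/v1/models', None)

chat_probe = {
    "path": "/v1/chat/completions",
    "post_data": {
        "model": "mistralai/Mixtral-8x7B-Instruct-v0.1",
        "messages": [{"role": "user", "content": "Hello! What is your name?"}],
        "max_tokens": 1,
    },
}
print(normalize_probe(chat_probe)[:2])  # ('POST', '/v1/chat/completions')
```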

Due to the long cold-start time of LLMs (e.g., installing dependencies, downloading models, and loading them into GPU memory), SkyServe by default ignores readiness probe failures during an initial 1200 seconds (20 minutes). This can be configured as follows:

service:
  readiness_probe:
    initial_delay_seconds: 1800
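The interplay between the initial delay and later probe failures can be sketched as a small state machine. This is a toy model under stated assumptions: `initial_delay_s` mirrors initial_delay_seconds, while `failure_grace_s` (180 s here) is a hypothetical stand-in for the unspecified "period of time" in the readiness-probe footnote. The class and state names are our own.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class ProbeTracker:
    """Toy readiness bookkeeping for one replica (hypothetical, not SkyServe code)."""
    initial_delay_s: float = 1200.0  # mirrors readiness_probe.initial_delay_seconds
    failure_grace_s: float = 180.0   # assumed stand-in for the unspecified grace period
    ready_once: bool = False
    first_failure_at: Optional[float] = None

    def observe(self, t: float, ok: bool) -> str:
        """Record a probe result t seconds after launch and return the replica state."""
        if ok:
            self.ready_once = True
            self.first_failure_at = None
            return "READY"
        if not self.ready_once and t < self.initial_delay_s:
            return "STARTING"  # cold start: failures are ignored
        if self.first_failure_at is None:
            self.first_failure_at = t
        if t - self.first_failure_at >= self.failure_grace_s:
            return "REPLACE"  # terminate and launch a fresh replica
        return "NOT_READY"  # stop routing traffic to it, but keep waiting

tracker = ProbeTracker()
for t, ok in [(600, False), (1300, True), (1400, False), (1500, False), (1600, False)]:
    print(t, tracker.observe(t, ok))
```

With these values, the loop reports STARTING, READY, NOT_READY, NOT_READY, and finally REPLACE once failures have persisted for 200 s.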

To update the service with the new configuration, we can run:

sky serve update mixtral mixtral.yaml

The new mixtral.yaml with the readiness probe updated:

# mixtral.yaml
service:
  readiness_probe:
    path: /v1/chat/completions
    post_data:
      model: mistralai/Mixtral-8x7B-Instruct-v0.1
      messages:
        - role: user
          content: Hello! What is your name?
      max_tokens: 1
    initial_delay_seconds: 1800
  replicas: 2

# Fields below describe each replica.
resources:
  ports: 8080
  accelerators: {L4:8, A10G:8, A100:4, A100:8, A100-80GB:2, A100-80GB:4, A100-80GB:8}

setup: |
  conda create -n vllm python=3.10 -y
  conda activate vllm
  pip install vllm==0.3.0 transformers==4.37.2

run: |
  conda activate vllm
  export PATH=$PATH:/sbin
  python -m vllm.entrypoints.openai.api_server \
    --tensor-parallel-size $SKYPILOT_NUM_GPUS_PER_NODE \
    --host 0.0.0.0 --port 8080 \
    --model mistralai/Mixtral-8x7B-Instruct-v0.1

Autoscaling

The previous example uses a simple deployment with a fixed number of replicas. This might not be ideal if the traffic shows large fluctuations (e.g., day vs. night traffic).

SkyServe supports autoscaling out of the box, based on the dynamic load (queries per second, or QPS) to the endpoint.

To enable autoscaling, you can specify some additional configurations by replacing the replicas field with a replica_policy config:

service:
  readiness_probe: ...
  replica_policy:
    min_replicas: 0
    max_replicas: 10
    target_qps_per_replica: 2.5

min_replicas and max_replicas set the lower and upper bounds on the number of replicas. target_qps_per_replica is the threshold SkyServe uses to autoscale: the QPS (queries per second) each replica is expected to handle. SkyServe scales the service so that each replica receives approximately target_qps_per_replica queries per second.

Note that we set min_replicas to 0 so that SkyServe can scale to zero and save costs when no requests are received for a period of time.
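In steady state, this policy amounts to dividing the observed QPS by target_qps_per_replica, rounding up, and clamping the result to the bounds. The minimal sketch below shows that arithmetic. `target_replicas` is a hypothetical name, and SkyServe's actual autoscaler may also wait before scaling up or down to avoid reacting to short spikes.

```python
import math

def target_replicas(qps: float, target_qps_per_replica: float,
                    min_replicas: int, max_replicas: int) -> int:
    """Replica count that keeps per-replica load near the target, within bounds."""
    wanted = math.ceil(qps / target_qps_per_replica)
    return max(min_replicas, min(max_replicas, wanted))

# Using the policy above: min 0, max 10, 2.5 QPS per replica.
for qps in (0, 6, 100):
    print(qps, "QPS ->", target_replicas(qps, 2.5, 0, 10), "replicas")
# 0 QPS -> 0 replicas, 6 QPS -> 3 replicas, 100 QPS -> 10 replicas
```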

More details on autoscaling can be found in our docs.

To update the previous service to support autoscaling, run:

sky serve update mixtral mixtral.yaml

mixtral.yaml with advanced readiness probe and autoscaling

# mixtral.yaml
service:
  readiness_probe:
    path: /v1/chat/completions
    post_data:
      model: mistralai/Mixtral-8x7B-Instruct-v0.1
      messages:
        - role: user
          content: Hello! What is your name?
      max_tokens: 1
    initial_delay_seconds: 1800
  replica_policy:
    min_replicas: 0
    max_replicas: 10
    target_qps_per_replica: 2.5

# Fields below describe each replica.
resources:
  ports: 8080
  accelerators: {L4:8, A10G:8, A100:4, A100:8, A100-80GB:2, A100-80GB:4, A100-80GB:8}

setup: |
  conda create -n vllm python=3.10 -y
  conda activate vllm
  pip install vllm==0.3.0 transformers==4.37.2

run: |
  conda activate vllm
  export PATH=$PATH:/sbin
  python -m vllm.entrypoints.openai.api_server \
    --tensor-parallel-size $SKYPILOT_NUM_GPUS_PER_NODE \
    --host 0.0.0.0 --port 8080 \
    --model mistralai/Mixtral-8x7B-Instruct-v0.1

Managed Spot Instances: ~50% cost saving

The previous examples use on-demand instances as service replicas. On-demand instances can be quite costly because serving GenAI models requires expensive high-end GPU instances.

Spot instances, by contrast, can offer more than 3x cost savings, though they are less reliable due to preemptions and scarcer across regions/clouds. Because model serving is stateless, it is well suited to spot instances. With SkyServe, a service can easily be deployed on spot instances with automatic preemption recovery, while drawing on capacity across multiple regions/clouds.

By specifying use_spot: true in the resources section, SkyServe will launch spot instances for your service and auto-manage them:

resources:
  use_spot: true
  accelerators: {L4:8, A10G:8, A100:4, A100:8, A100-80GB:2, A100-80GB:4, A100-80GB:8}

With more regions, clouds, and accelerator types enabled, SkyServe can search for spot instances across multiple resource pools, which significantly increases spot instance availability.
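A simple probability model shows why more pools help. If each pool independently has spot capacity with probability p, the chance that at least one pool can launch a replica is 1 minus the product of the per-pool failure probabilities. The 59% starting point is the single-zone spot V100 availability on AWS quoted in the footnotes. The independence assumption is ours and is optimistic, since real zones and regions often run short at the same time.

```python
from math import prod

def pooled_availability(pool_availabilities):
    """Probability that at least one pool can launch an instance.

    Assumes pools are independent, which is optimistic: real zones and
    regions often run short of capacity at the same time.
    """
    return 1 - prod(1 - p for p in pool_availabilities)

single_zone = 0.59  # single-zone figure from the footnote
for n in (1, 2, 3):
    print(n, "pool(s):", round(pooled_availability([single_zone] * n), 4))
# 1 pool(s): 0.59, 2 pool(s): 0.8319, 3 pool(s): 0.9311
```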

In addition, we suggest increasing the number of replicas or decreasing target_qps_per_replica to over-provision spot instances so that preemptions are better absorbed. For example, replacing 2 on-demand replicas with 3 spot replicas gives:

- Better service level guarantee;

- 50% cost saving.
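The 50% figure follows from simple arithmetic. Assume spot replicas cost exactly one third of on-demand, which is the lower end of the "more than 3x" savings quoted above. Then three spot replicas cost as much as one on-demand replica, which is half the cost of two:

```python
def relative_cost(n_spot: int, n_on_demand: int, spot_price_ratio: float) -> float:
    """Cost of n_spot spot replicas relative to n_on_demand on-demand replicas.

    spot_price_ratio is the spot price as a fraction of the on-demand price.
    """
    return n_spot * spot_price_ratio / n_on_demand

ratio = relative_cost(3, 2, 1 / 3)  # spot assumed to be exactly 3x cheaper
print(f"3 spot replicas cost {ratio:.0%} of 2 on-demand replicas")
# 3 spot replicas cost 50% of 2 on-demand replicas
```

With deeper spot discounts, the saving grows beyond 50% even with the extra replica.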

Stay tuned for an advanced policy currently being added to SkyServe that provides even lower costs and makes recovery more intelligent.

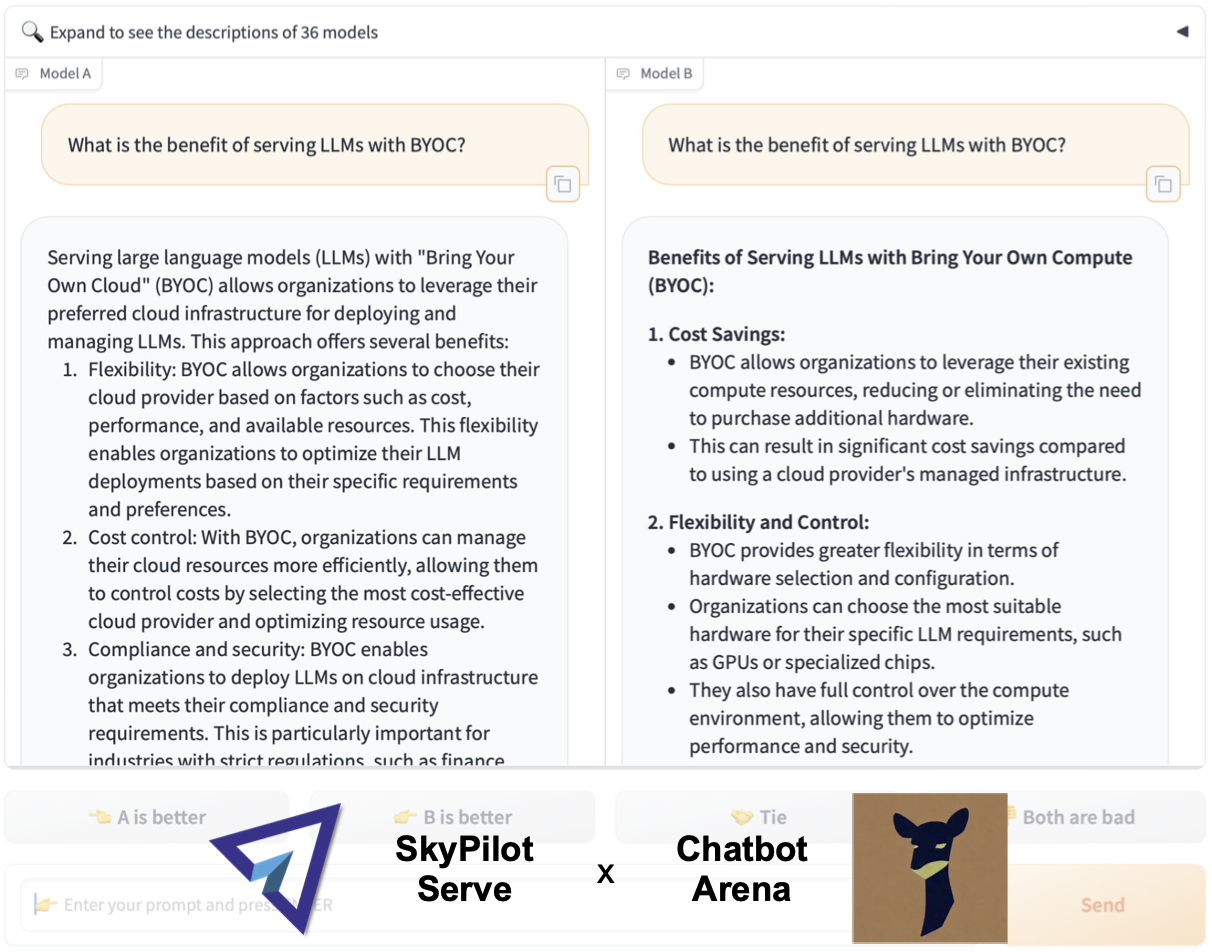

SkyServe x Chatbot Arena

SkyServe has been used by LMSys Chatbot Arena to serve ~10 open models (including Mixtral, Llama2 and more) and process over 800,000 requests globally over the last four months. We’re happy to see that SkyServe is offering real value-adds for an LLM service: simple deployment and upgrades, high cost savings, and automatic failure recovery.

Runnable Examples

SkyServe integrates with many popular open-source AI frameworks. Below are runnable examples that you can simply copy-paste and launch in your own cloud accounts.

- HuggingFace TGI: Any LLM supported by the TGI framework can be launched easily. Simply switch models with an environment variable (the model ID)!

- Meta’s CodeLlama-70B: Use SkyServe to bring up your private CodeLlama-70B, and share it with your team via VSCode, API, and Chat endpoints! (Thanks to Tabby for the simple IDE integration.)

- vLLM: Easy, Fast, and Cheap LLM Serving with PagedAttention on SkyServe.

- SGLang: Try SGLang, the next-generation interface and runtime for LLM inference from LMSys, on SkyServe!

- Tabby: A lightweight code assistant that can be easily hosted with SkyServe and accessed from VSCode!

- LoRAX: Easily use the Predibase LoRAX server to serve many hundreds of LoRAs.

Any OpenAI API-Compatible LLMs on HuggingFace TGI

sky serve up -n tgi-mistral huggingface-tgi.yaml --env MODEL_ID=mistralai/Mistral-7B-Instruct-v0.1

sky serve up -n tgi-vicuna huggingface-tgi.yaml --env MODEL_ID=lmsys/vicuna-13b-v1.5
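If you serve many TGI models this way, the commands differ only in the service name and MODEL_ID. A small hypothetical helper can generate them. `serve_up_cmd` is our own name, not part of SkyPilot, and it uses only the -n and --env flags shown above.

```python
import shlex

def serve_up_cmd(service_name: str, yaml_path: str, env: dict) -> list:
    """Build a `sky serve up` argv, passing each env var as --env KEY=VALUE."""
    cmd = ["sky", "serve", "up", "-n", service_name, yaml_path]
    for key, value in env.items():
        cmd += ["--env", f"{key}={value}"]
    return cmd

models = {
    "tgi-mistral": "mistralai/Mistral-7B-Instruct-v0.1",
    "tgi-vicuna": "lmsys/vicuna-13b-v1.5",
}
for name, model_id in models.items():
    print(shlex.join(serve_up_cmd(name, "huggingface-tgi.yaml", {"MODEL_ID": model_id})))
```

This only prints the two commands above. Passing each list to `subprocess.run(cmd, check=True)` would actually launch the services.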

The huggingface-tgi.yaml and a curl command to query the service:

Learn more here.

# SkyServe YAML to run HuggingFace TGI
#
# Usage:
#   sky serve up -n tgi huggingface-tgi.yaml
# Then visit the endpoint printed in the console. You could also
# check the endpoint by running:
#   sky serve status --endpoint tgi

envs:
  MODEL_ID: lmsys/vicuna-13b-v1.5

service:
  readiness_probe: /health
  replicas: 2

resources:
  ports: 8082
  accelerators: A100:1

run: |
  docker run --gpus all --shm-size 1g -p 8082:80 \
    -v ~/data:/data ghcr.io/huggingface/text-generation-inference \
    --model-id $MODEL_ID

curl -L $(sky serve status tgi --endpoint)/generate \
  -X POST \
  -H 'Content-Type: application/json' \
  -d '{
    "inputs": "What is Deep Learning?",
    "parameters": {
      "max_new_tokens": 20
    }
  }'

# Example Output:
{"generated_text":"\n\nDeep learning is a subset of machine learning that uses artificial neural networks to model and solve"}

Hosting Code Llama 70B from Meta

sky serve up -n codellama codellama.yaml

The codellama.yaml and a curl command to query the service:

Learn more here.

# An example yaml for serving Code Llama model from Meta with an OpenAI API.
# Usage:
#  1. Launch on a single instance: `sky launch -c code-llama codellama.yaml`
#  2. Scale up to multiple replicas with a single endpoint:
#     `sky serve up -n code-llama codellama.yaml`

service:
  readiness_probe:
    path: /v1/completions
    post_data:
      model: codellama/CodeLlama-70b-Instruct-hf
      prompt: "def hello_world():"
      max_tokens: 1
    initial_delay_seconds: 1800
  replicas: 2

resources:
  accelerators: {L4:8, A10G:8, A10:8, A100:4, A100:8, A100-80GB:2, A100-80GB:4, A100-80GB:8}
  disk_size: 1024
  disk_tier: best
  memory: 32+
  ports: 8000

setup: |
  conda activate codellama
  if [ $? -ne 0 ]; then
    conda create -n codellama python=3.10 -y
    conda activate codellama
  fi
  pip list | grep vllm || pip install "git+https://github.com/vllm-project/vllm.git"
  pip install git+https://github.com/huggingface/transformers

run: |
  conda activate codellama
  export PATH=$PATH:/sbin
  # Reduce --max-num-seqs to avoid OOM during loading model on L4:8
  python -u -m vllm.entrypoints.openai.api_server \
    --host 0.0.0.0 \
    --model codellama/CodeLlama-70b-Instruct-hf \
    --tensor-parallel-size $SKYPILOT_NUM_GPUS_PER_NODE \
    --max-num-seqs 64 | tee ~/openai_api_server.log

curl -L $(sky serve status codellama --endpoint)/v1/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "codellama/CodeLlama-70b-Instruct-hf",
    "prompt": "def quick_sort(a: List[int]):",
    "max_tokens": 512
  }'

# Example Output:
{"id":"cmpl-f0ceac768bf847269f64c2e5bc08126f","object":"text_completion","created":1770,"model":"codellama/CodeLlama-70b-Instruct-hf","choices":[{"index":0,"text":"\n if len(a) < 2:\n return a\n else:\n pivot = a[0]\n lesser = quick_sort([x for x in a[1:] if x < pivot])\n greater = quick_sort([x for x in a[1:] if x >= pivot])\n return lesser + pivot + greaterq\n \n","logprobs":null,"finish_reason":"stop"}],"usage":{"prompt_tokens":13,"total_tokens":97,"completion_tokens":84}}

vLLM: Serving Llama-2 with SOTA LLM Inference Engine

sky serve up -n vllm vllm.yaml --env HF_TOKEN=<your-huggingface-token>

The vllm.yaml and a curl command to query the service:

Learn more here.

# SkyServe YAML for vLLM inference engine
#
# Usage:
#   set your HF_TOKEN in the envs section or pass it in CLI
#   sky serve up -n vllm vllm.yaml
# Then visit the endpoint printed in the console. You could also
# check the endpoint by running:
#   sky serve status --endpoint vllm

service:
  # Specifying the path to the endpoint to check the readiness of the service.
  readiness_probe: /v1/models
  # How many replicas to manage.
  replicas: 2

envs:
  MODEL_NAME: meta-llama/Llama-2-7b-chat-hf
  HF_TOKEN: <your-huggingface-token> # Change to your own huggingface token

resources:
  accelerators: {L4:1, A10G:1, A10:1, A100:1, A100-80GB:1}
  ports:
    - 8000

setup: |
  conda activate vllm
  if [ $? -ne 0 ]; then
    conda create -n vllm python=3.10 -y
    conda activate vllm
  fi
  pip list | grep vllm || pip install vllm==0.3.0
  pip list | grep transformers || pip install transformers==4.37.2
  python -c "import huggingface_hub; huggingface_hub.login('${HF_TOKEN}')"

run: |
  conda activate vllm
  echo 'Starting vllm openai api server...'
  python -m vllm.entrypoints.openai.api_server \
    --model $MODEL_NAME --tokenizer hf-internal-testing/llama-tokenizer \
    --host 0.0.0.0

curl -L $(sky serve status vllm --endpoint)/v1/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "meta-llama/Llama-2-7b-chat-hf",
    "prompt": "San Francisco is a",
    "max_tokens": 7,
    "temperature": 0
  }'

# Example Output:
{"id":"cmpl-6e3b960a5e7b47dbadb8846e76aef87d","object":"text_completion","created":1479,"model":"meta-llama/Llama-2-7b-chat-hf","choices":[{"index":0,"text":" city in Northern California that is known","logprobs":null,"finish_reason":"length"}],"usage":{"prompt_tokens":5,"total_tokens":12,"completion_tokens":7}}

Serving Multimodal Model LLaVA with SGLang

sky serve up -n sglang sglang.yaml

The sglang.yaml and a curl command to query the service:

Learn more here.

# SkyServe YAML for SGLang
#
# Usage:
#   set your HF_TOKEN in the envs section or pass it in CLI
#   sky serve up -n sglang sglang.yaml --env HF_TOKEN=<your-huggingface-token>
# Then visit the endpoint printed in the console. You could also
# check the endpoint by running:
#   sky serve status --endpoint sglang

service:
  # Specifying the path to the endpoint to check the readiness of the service.
  readiness_probe: /health
  # How many replicas to manage.
  replicas: 2

envs:
  MODEL_NAME: liuhaotian/llava-v1.6-vicuna-7b
  TOKENIZER_NAME: llava-hf/llava-1.5-7b-hf

resources:
  accelerators: {L4:1, A10G:1, A10:1, A100:1, A100-80GB:1}
  ports:
    - 8000

setup: |
  conda activate sglang
  if [ $? -ne 0 ]; then
    conda create -n sglang python=3.10 -y
    conda activate sglang
  fi
  pip list | grep sglang || pip install "sglang[all]"
  pip list | grep transformers || pip install transformers==4.37.2

run: |
  conda activate sglang
  echo 'Starting sglang openai api server...'
  export PATH=$PATH:/sbin/
  python -m sglang.launch_server --model-path $MODEL_NAME \
    --tokenizer-path $TOKENIZER_NAME \
    --chat-template vicuna_v1.1 \
    --host 0.0.0.0 --port 8000

curl -L $(sky serve status sglang --endpoint)/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "liuhaotian/llava-v1.6-vicuna-7b",
    "messages": [
      {
        "role": "user",
        "content": [
          {"type": "text", "text": "Describe this image"},
          {
            "type": "image_url",
            "image_url": {
              "url": "https://raw.githubusercontent.com/sgl-project/sglang/main/examples/quick_start/images/cat.jpeg"
            }
          }
        ]
      }
    ]
  }'

# Example Output:
{"id":"eea519a1e8a6496fa449d3b96a3c99c1","object":"chat.completion","created":1707709646,"model":"liuhaotian/llava-v1.6-vicuna-7b","choices":[{"index":0,"message":{"role":"assistant","content":" This is an image of an anthropomorphic cat character wearing sunglass"},"finish_reason":null}],"usage":{"prompt_tokens":46,"total_tokens":2205,"completion_tokens":2159}}
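The request body above puts a text part and an image_url part in a single user message, following the OpenAI chat format. Below is a minimal Python sketch that builds the same payload. `image_chat_payload` is a hypothetical helper. You can send its output with any HTTP client, as long as the client follows redirects the way curl -L does.

```python
import json

def image_chat_payload(model: str, question: str, image_url: str) -> str:
    """Build an OpenAI-style chat request mixing a text part and an image URL."""
    return json.dumps({
        "model": model,
        "messages": [{
            "role": "user",
            "content": [
                {"type": "text", "text": question},
                {"type": "image_url", "image_url": {"url": image_url}},
            ],
        }],
    })

body = image_chat_payload(
    "liuhaotian/llava-v1.6-vicuna-7b",
    "Describe this image",
    "https://raw.githubusercontent.com/sgl-project/sglang/main/examples/quick_start/images/cat.jpeg",
)
print(body[:60])
```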

Self-Hosted Light-Weight Code Assistant in VSCode with Tabby

sky serve up -n tabby tabby.yaml

The tabby.yaml and a curl command to query the service:

Learn more here.

# SkyServe YAML for code assistant in VSCode with Tabby
#
# Usage:
#   sky serve up -n tabby tabby.yaml
# Then visit the endpoint printed in the console. You could also
# check the endpoint by running:
#   sky serve status --endpoint tabby

service:
  readiness_probe: /v1/health
  replicas: 2

resources:
  ports: 8080
  accelerators: {T4:1, L4:1, A100:1, A10G:1}

run: |
  docker run --gpus all -p 8080:8080 -v ~/.tabby:/data \
    tabbyml/tabby \
    serve --model TabbyML/StarCoder-1B --device cuda

curl -L $(sky serve status tabby --endpoint)/v1/completions \
  -X POST \
  -H "Content-Type: application/json" \
  -d '{
    "language": "python",
    "segments": {
      "prefix": "def fib(n):\n ",
      "suffix": "\n return fib(n - 1) + fib(n - 2)"
    }
  }'

# Example Output:
{"id":"cmpl-7305a984-429a-4342-bf95-3d47f668c8cd","choices":[{"index":0,"text":" if n == 0:\n return 0\n if n == 1:\n return 1"}]}

Scaling Multi-LoRA Inference Server Using LoRAX

sky serve up -n lorax lorax.yaml [--env MODEL_ID=mistralai/Mistral-7B-Instruct-v0.1]

The lorax.yaml and a curl command to query the service:

Learn more here.

# SkyServe YAML for multi-LoRA inference server using LoRAX
#
# Usage:
#   sky serve up -n lorax lorax.yaml
# Then visit the endpoint printed in the console. You could also
# check the endpoint by running:
#   sky serve status --endpoint lorax

envs:
  MODEL_ID: mistralai/Mistral-7B-Instruct-v0.1

service:
  readiness_probe:
    path: /generate
    post_data:
      inputs: "[INST] Natalia sold clips to 48 of her friends in April, and then she sold half as many clips in May. How many clips did Natalia sell altogether in April and May? [/INST]"
      parameters:
        max_new_tokens: 64
        adapter_id: vineetsharma/qlora-adapter-Mistral-7B-Instruct-v0.1-gsm8k
  replicas: 2

resources:
  accelerators: {L4:1, A100:1, A10G:1}
  memory: 32+
  ports:
    - 8080

run: |
  docker run --gpus all --shm-size 1g -p 8080:80 -v ~/data:/data \
    ghcr.io/predibase/lorax:latest \
    --model-id $MODEL_ID

curl -L $(sky serve status lorax --endpoint)/generate \
  -X POST \
  -H 'Content-Type: application/json' \
  -d '{
    "inputs": "[INST] Natalia sold clips to 48 of her friends in April, and then she sold half as many clips in May. How many clips did Natalia sell altogether in April and May? [/INST]",
    "parameters": {
      "max_new_tokens": 64,
      "adapter_id": "vineetsharma/qlora-adapter-Mistral-7B-Instruct-v0.1-gsm8k"
    }
  }'

# Example Output:
{"generated_text":"Natalia sold 48/2 = <<48/2=24>>24 clips in May.\nIn total, Natalia sold 48 + 24 = <<48+24=72>>72 clips in April and May.\n#### 72"}

SkyServe Roadmap

There are many exciting improvements on SkyServe’s roadmap:

- Customizable load-balancing and autoscaling

- AI clouds support (Lambda, RunPod) for 3x-4x cost saving

- More advanced spot policy: a policy that offers >50% cost savings using spot instances while guaranteeing or improving service quality at the same time

- More advanced multi-accelerator support: routing traffic to different accelerators for better cost efficiency

Please stay tuned for these updates, and give us feedback by joining our Slack or filing issues on our GitHub repo. Come and join our SkyPilot open-source community to make AI workloads much easier to run on any cloud!

Learn More

Join our slack to chat with the SkyPilot community!

[1] The graph shows that going from a single zone to multiple regions increased availability (the percentage of time a spot V100 instance can be launched on AWS) from 59% to 100%.

[2] Based on spot availability, and assuming on-demand instances must be used whenever spot instances are unavailable, the cost saving is around 2.4x.

[3] Because probes may fail due to network congestion or overloaded computation, SkyServe does not restart the replica immediately. If the probe keeps failing for a specified period of time, SkyServe terminates the replica and launches a new one.